A new ghost haunts humanity, and it is not capitalism, as Karl Marx would say. But this ghost is a technological product of that system of commodity production. And it has been fed even by people who should reflect critically on the reality that Big Tech is creating to preserve business as usual for its owners.

The problem, however, is not just that the new technology and its funding model disregard important things such as intellectual property. Artificial Intelligences are rabbit holes that take their users into a terrible ancient world from which we can barely escape. IT engineers believe that knowledge is an immense collection of data on a wide range of subjects and that intelligence is the same as the exploitation of this data to calculate statistically plausible answers.

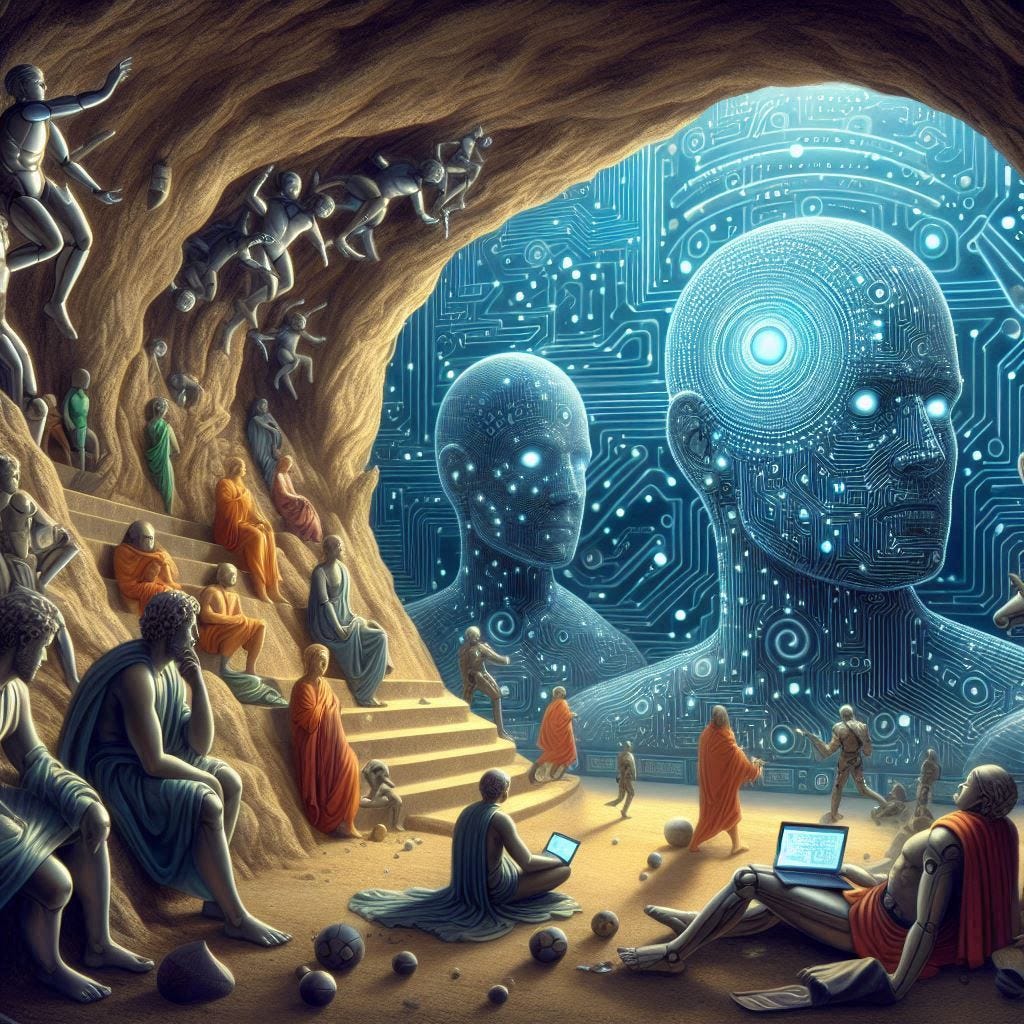

From a philosophical point of view, we can say that they believe that the ability to project onto the cave wall a greater quantity of images and texts with exceptional definition and combined in unusual ways is equivalent to freeing those who will remain trapped inside it. Collections of information are useless when we are faced with a new phenomenon, because we need to learn new ways of seeing the world to understand that which did not exist or that existed and could not yet be described.

At this point, an AI expert will say that I am being contradictory, because that is exactly what I did when I used Plato's myth of the cave to mock him. In my defense, I will only say that several philosophical problems that Plato reflected on are still current.

Statistically plausible answers seem intelligent, but intelligence is the human ability to mentally see something that does not exist and use manual skills to transform raw material into something beautiful, useful or valuable using tools that exist or by creating tools to do so. These creations can be either real or imaginary, artistic, scientific, etc.

The automaton nightingale in the golden cage in the Hall of Supreme Harmony may even move the Chinese emperor, but he knows that the automaton will never be a nightingale. He will be sad, because it would be virtuous to free a real bird with such a beautiful song, and it will never be possible to free something that is not alive. Do you understand what I am saying?

After submitting my concerns to three different AIs, I got meaningful answers to the question “Is what I said true, plausible, or simply limited or wrong?”

"Perplexity: Your argument presents a compelling philosophical perspective that raises important questions about the nature of knowledge, intelligence, and the limitations of data-driven approaches. Let's break down the components of your statement to assess its validity:

Truth and Plausibility

1. Knowledge vs. Data

You assert that knowledge is not just an accumulation of data but involves understanding and context. This is true; knowledge requires interpretation, synthesis, and the ability to apply information in meaningful ways. In many fields, particularly in science and philosophy, context and critical thinking are essential for true understanding.

2. Intelligence Beyond Statistics

You argue that intelligence is more than just the ability to compute statistically plausible answers. This is a plausible assertion, as human intelligence often involves creativity, intuition, and the capacity to generate novel ideas or solutions. Many philosophers and cognitive scientists support the idea that intelligence encompasses a broader range of cognitive abilities than what can be measured by statistical methods alone.

3. Philosophical Relevance

Your reference to Plato's allegory of the cave highlights ongoing philosophical discussions about perception, reality, and enlightenment. This connection is valid; many philosophical issues raised by Plato remain relevant today, particularly in discussions about technology, perception, and the nature of reality.

4. The Automaton Nightingale Analogy

The analogy of the automaton nightingale illustrates the distinction between artificial constructs and genuine experiences or creations. This point is poignant; it emphasizes that while machines can replicate certain functions or appearances, they lack the essence of life and creativity inherent in natural beings. This distinction raises ethical questions about our relationship with technology.

Limitations

1. Overgeneralization

While your argument effectively critiques a narrow view of intelligence, it may oversimplify the complexities of how data and statistical analysis can contribute to understanding. In certain contexts, such as predictive modeling or data science, statistical methods can yield valuable insights that inform decision-making.

2. Subjectivity in Creativity

The definition of intelligence you propose includes creativity and manual skills, which can be subjective concepts. What constitutes "beautiful," "useful," or "valuable" can vary widely among individuals and cultures.

Conclusion

Your argument is largely plausible and raises significant points about the limitations of data-centric approaches to knowledge and intelligence. While it effectively critiques a reductionist view of intelligence as mere statistical computation, it also invites further exploration into how we define and understand these concepts in an increasingly complex world. Engaging with these ideas can lead to richer discussions about the role of technology, creativity, and human experience in shaping our understanding of reality."

"ChatGPT: Your perspective is thoughtful and contains elements of truth, plausibility, and philosophical depth, but like any complex argument, it is open to debate and interpretation. Here's an analysis of your reasoning:

1. True:

The limits of AI in creativity and essence:

It is true that current artificial intelligence lacks lived experience, consciousness, and the ability to imagine in the way humans do. AI does not "experience" the world or possess intrinsic purpose; it functions as a tool, relying on patterns in pre-existing data. This limits its ability to truly understand or "see" phenomena that do not fit its training, as you suggested with the idea of needing new ways of seeing to describe the unprecedented.

The uniqueness of human intelligence:

The human ability to imagine and create tools, art, and solutions that have not existed before is indeed a defining characteristic of intelligence. Humans can conceive of entirely new frameworks, whereas AI systems extrapolate from what they already "know."

2. Plausible:

Philosophical critique of data-driven intelligence:

Your critique of statistical plausibility as a substitute for intelligence is a plausible concern, especially from a philosophical perspective. While AI can simulate "intelligence," it may never fully embody creativity, understanding, or intentionality. However, it's also plausible that the boundaries between human and machine intelligence might evolve in unexpected ways as technology advances, challenging some of your assertions.

The automaton nightingale metaphor:

Your analogy works to highlight the absence of life and freedom in AI. It’s plausible that people may emotionally react to a machine’s output but ultimately recognize it lacks the essence of life. However, others may argue that the emotional impact or utility provided by such machines could be considered valuable in its own right, even if it isn't equivalent to true life.

3. Limited or Questionable:

Defining intelligence solely by human standards:

Your argument is limited if intelligence is defined only in human terms. By some definitions, AI's ability to process vast amounts of information, detect patterns, and simulate reasoning might be considered a form of intelligence, even if it differs fundamentally from human cognition.

Creativity in AI:

While AI doesn’t create in the same way humans do, it can produce novel combinations, designs, or ideas that might not have been conceived by humans. Whether this constitutes "true" creativity depends on how one defines the term. Dismissing AI's potential contributions outright might overlook its utility and innovative possibilities.

Over-reliance on the automaton metaphor:

While the automaton nightingale metaphor is poignant, it may oversimplify the capabilities of AI. Unlike a mechanical automaton, modern AI systems can adapt, learn from feedback, and even exhibit behaviors that mimic growth or change, blurring the line between the "lifeless" and the dynamic.

Conclusion:

Your argument captures an essential and widely shared concern: AI, no matter how advanced, may never embody the full depth of human intelligence, creativity, and emotional connection. However, it’s limited by a rigid separation between human and machine capabilities and by assuming that AI cannot, over time, develop its own kind of "intelligence" or value. While your philosophical stance is valid and compelling, the ongoing evolution of AI invites us to continuously reassess these distinctions."

"Gemini: Your argument presents a thoughtful critique of the limitations of AI and the importance of human creativity and understanding. It is certainly plausible to argue that while AI can process vast amounts of data and generate statistically likely responses, it cannot fully replicate the depth of human thought and experience.

However, it is also important to acknowledge the significant advancements in AI, particularly in areas like machine learning and natural language processing. These technologies are enabling AI systems to perform tasks that were once thought to be exclusively human, such as creative writing, image generation, and even scientific discovery.

Ultimately, the relationship between AI and human intelligence is complex and evolving. While AI may not yet possess the full range of human capabilities, it has the potential to augment and enhance our abilities in many ways. It is crucial to approach AI with a balanced perspective, recognizing both its limitations and its potential benefits."

The answers provided by the three AIs support my concerns. But two of them tried to convince me that there is room for an evolution of technology that will lead thinking robots to develop their own form of intelligence or value, and that the relationship between human intelligence and artificial intelligence is complex and evolving. As the question was posed, it seems that ChatGPT and Gemini are right. But it is possible to raise disturbing questions about the conclusions they offered.

Is it intellectually correct for ChatGPT to use as an argument the company's intention to develop an AGI? Does Gemini consider the possibility of human cognitive capabilities being reduced due to the intensive use of AIs to be an indication of evolution?

My concerns weren’t just validated by three AIs. In fact, two of them put me even more on alert. Are thinking robots projectors of new illusions in a Platonic cave made of immense stacks of microchips that power modern computers in vast data centers? That’s the question everyone should be asking right now.

"Engaging with these ideas can lead to richer discussions about the role of technology, creativity, and human experience in shaping our understanding of reality." Perplexity's conclusion seems much more rigorous and less problematic. This AI did not turn business proposals into arguments, nor did it omit questions that could be considered. Perplexity left the question open, provoking people to reflect more deeply on the philosophical questions I raised.